Bagua-Net

Bagua-Net is a low-level communication acceleration feature provided by Bagua. It can greatly improve AllReduce throughput on TCP networks.

Technically, Bagua-Net is a plugin for NVIDIA's NCCL communication library, which was the fastest generally available GPU communication implementation as of 2021. It replaces NCCL's TCP communication logic. It improves fairness between concurrent streams and reduces contention between sockets, and this substantially improves communication performance.
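One way to picture "fairness between streams" is a toy model. Suppose one large buffer were pushed through a single stream while the others sat idle. Instead, the buffer is cut into fixed-size chunks that are dealt out round-robin, so every stream carries nearly the same number of bytes. This is a hypothetical illustration only. The function names, chunk size, and stream count are assumptions, and this is not how Bagua-Net is actually implemented.

```python
from typing import List, Tuple


# Hypothetical helper: NOT Bagua-Net's implementation, only a toy model of
# spreading one message evenly over several parallel streams/sockets.
def split_across_streams(
    nbytes: int, num_streams: int, chunk_size: int
) -> List[List[Tuple[int, int]]]:
    """Deal (offset, length) chunks of an nbytes buffer out to streams round-robin."""
    if nbytes < 0 or num_streams <= 0 or chunk_size <= 0:
        raise ValueError("nbytes must be >= 0; num_streams and chunk_size must be > 0")
    streams: List[List[Tuple[int, int]]] = [[] for _ in range(num_streams)]
    offset = 0
    chunk_index = 0
    while offset < nbytes:
        length = min(chunk_size, nbytes - offset)  # the last chunk may be short
        streams[chunk_index % num_streams].append((offset, length))
        offset += length
        chunk_index += 1
    return streams


def bytes_per_stream(streams: List[List[Tuple[int, int]]]) -> List[int]:
    """Total bytes each stream is responsible for."""
    return [sum(length for _, length in chunks) for chunks in streams]


if __name__ == "__main__":
    # 10 MiB split into 512 KiB chunks over 4 streams: 20 chunks, 5 per stream.
    plan = split_across_streams(10 * 1024 * 1024, num_streams=4, chunk_size=512 * 1024)
    print(bytes_per_stream(plan))
```

With round-robin dealing, the byte counts of any two streams differ by at most one chunk. In this toy, no single stream ends up with a disproportionate share of the message.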

By enabling Bagua-Net, communication efficiency can be increased by 83% (code), and end-to-end training throughput by 35%:

```
# VGG16 on 4x8xV100 NCCL default implementation
Running benchmark...
Iter #0: 2620.2 img/sec GPU
Iter #1: 2771.9 img/sec GPU
Iter #2: 2772.6 img/sec GPU
Iter #3: 2794.5 img/sec GPU
Iter #4: 2627.9 img/sec GPU
Iter #5: 2787.8 img/sec GPU
Iter #6: 2775.9 img/sec GPU
Iter #7: 2741.6 img/sec GPU
Iter #8: 2760.0 img/sec GPU
Iter #9: 2796.6 img/sec GPU
Img/sec per GPU: 85.8 +-3.8
Total img/sec on 32 GPU(s): 2744.9 +-122.3
```

```
# VGG16 on 4x8xV100 Bagua-Net enabled
Running benchmark...
Iter #0: 4081.0 img/sec GPU
Iter #1: 4072.0 img/sec GPU
Iter #2: 4106.4 img/sec GPU
Iter #3: 4081.7 img/sec GPU
Iter #4: 4064.8 img/sec GPU
Iter #5: 4122.1 img/sec GPU
Iter #6: 3857.7 img/sec GPU
Iter #7: 4128.3 img/sec GPU
Iter #8: 4125.5 img/sec GPU
Iter #9: 3826.6 img/sec GPU
Img/sec per GPU: 126.5 +-6.4
Total img/sec on 32 GPU(s): 4046.6 +-205.2
```
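The summary lines in both VGG16 logs can be reproduced from the ten per-iteration values. This makes it clear how they were derived. The mean of the per-iteration values equals the "Total img/sec" figure, so those values appear to be aggregate throughput across all 32 GPUs. The "+-" figure matches 1.96 times the population standard deviation. The per-GPU line is simply both numbers divided by 32. The sketch below assumes that convention. The function name is hypothetical and is not taken from the benchmark script.

```python
import statistics
from typing import List, Tuple

# Per-iteration values copied from the two VGG16 logs. Their mean equals the
# "Total img/sec" line, so they appear to be aggregate throughput over all GPUs.
NCCL_DEFAULT = [2620.2, 2771.9, 2772.6, 2794.5, 2627.9,
                2787.8, 2775.9, 2741.6, 2760.0, 2796.6]
BAGUA_NET = [4081.0, 4072.0, 4106.4, 4081.7, 4064.8,
             4122.1, 3857.7, 4128.3, 4125.5, 3826.6]


def summarize(per_iter_total: List[float], num_gpus: int) -> Tuple[str, str]:
    """Rebuild the two summary lines, assuming +- is 1.96 x population std."""
    mean_total = statistics.mean(per_iter_total)
    spread_total = 1.96 * statistics.pstdev(per_iter_total)
    per_gpu = f"Img/sec per GPU: {mean_total / num_gpus:.1f} +-{spread_total / num_gpus:.1f}"
    total = f"Total img/sec on {num_gpus} GPU(s): {mean_total:.1f} +-{spread_total:.1f}"
    return per_gpu, total


if __name__ == "__main__":
    for name, values in (("NCCL default", NCCL_DEFAULT), ("Bagua-Net", BAGUA_NET)):
        print(name, *summarize(values, num_gpus=32), sep="\n  ")
```

Running it prints exactly the summary pairs shown in the logs: 85.8 +-3.8 and 2744.9 +-122.3 for NCCL, and 126.5 +-6.4 and 4046.6 +-205.2 for Bagua-Net.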

Quick Start

To enable Bagua-Net, you only need to pass the `--enable-bagua-net` argument to `bagua.distributed.launch` or `bagua.distributed.run`. No changes to your training script are required.

For example, with this distributed training example, you can launch the job with

```shell
python3 -m bagua.distributed.launch --enable-bagua-net \
    --nproc_per_node=8 synthetic_benchmark.py --algorithm gradient_allreduce
```

It is worth noting that you can even use `bagua.distributed.launch` or `bagua.distributed.run` with the `--enable-bagua-net` argument to launch PyTorch DDP jobs. This improves training throughput without migrating your code to Bagua.
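Bagua-Net is switched on entirely at the launcher. As a result, the same launch line works for a Bagua training script or an unmodified PyTorch DDP script. The sketch below assembles that command from Python, for example to drive it from a job-submission tool. The helper name `bagua_launch_command` and the script name `train_ddp.py` are hypothetical. Only the launcher flags that appear in this section are used.

```python
import shlex
import sys
from typing import List, Sequence


def bagua_launch_command(
    script: str,
    script_args: Sequence[str] = (),
    nproc_per_node: int = 8,
    enable_bagua_net: bool = True,
) -> List[str]:
    """Build the launcher argv; the training script itself is passed through untouched."""
    cmd = [sys.executable, "-m", "bagua.distributed.launch"]
    if enable_bagua_net:
        cmd.append("--enable-bagua-net")  # the only switch needed for Bagua-Net
    cmd.append(f"--nproc_per_node={nproc_per_node}")
    cmd.append(script)
    cmd.extend(script_args)
    return cmd


if __name__ == "__main__":
    # "train_ddp.py" is a hypothetical, unmodified PyTorch DDP script.
    cmd = bagua_launch_command("train_ddp.py")
    print(shlex.join(cmd))
    # To actually start the job: subprocess.run(cmd, check=True)
```

Keeping the flag as a boolean parameter makes it easy to launch the same script with and without Bagua-Net when comparing throughput.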

Benchmark

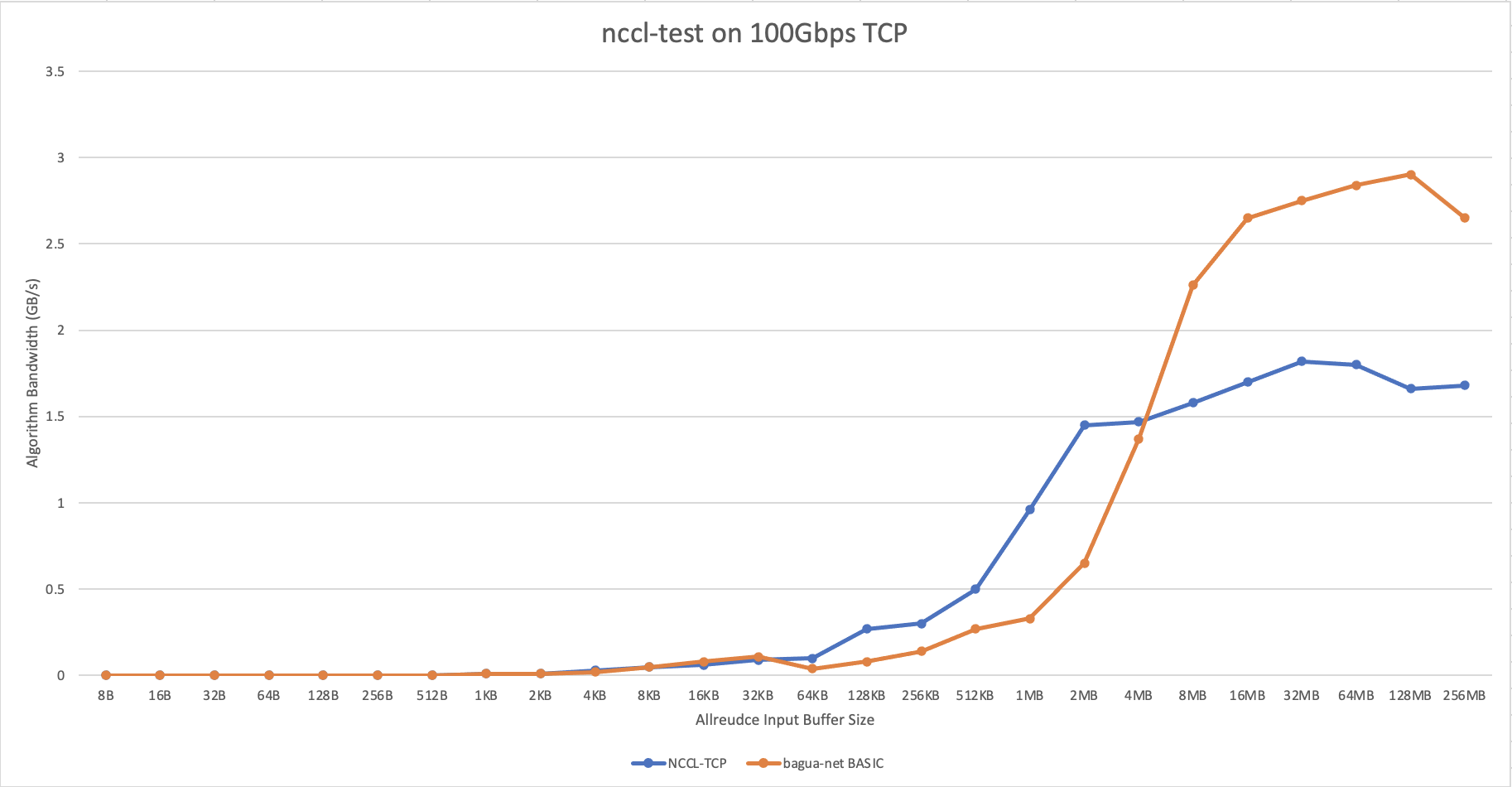

Performance comparison with the native NCCL implementation on a 100 Gbps TCP network

Thanks to tensor fusion in the communication library, the actual communication packets are larger than 10 MB, and in this range Bagua-Net outperforms NCCL-TCP. I have also run some experiments showing that when training a small model, Bagua-Net is not noticeably worse than NCCL-TCP.
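Tensor fusion packs many small gradient tensors into one buffer before issuing a collective. As a result, each AllReduce moves a large message instead of many small ones. The sketch below is a minimal greedy version. It assumes a fixed minimum bucket size and gradients arriving in a known order. The function name, the 10 MiB threshold, and the tensor sizes are hypothetical, and Bagua's actual bucketing may differ.

```python
from typing import List

MiB = 1024 * 1024


def fuse_into_buckets(tensor_bytes: List[int], min_bucket_bytes: int) -> List[List[int]]:
    """Greedily pack consecutive tensor sizes until a bucket reaches min_bucket_bytes."""
    buckets: List[List[int]] = []
    current: List[int] = []
    current_size = 0
    for size in tensor_bytes:
        current.append(size)
        current_size += size
        if current_size >= min_bucket_bytes:
            buckets.append(current)  # one AllReduce would be issued per bucket
            current, current_size = [], 0
    if current:
        buckets.append(current)  # the trailing bucket may stay below the threshold
    return buckets


if __name__ == "__main__":
    # Hypothetical gradient sizes, in the order they become ready.
    sizes = [4 * MiB, 4 * MiB, 4 * MiB, 2 * MiB, 9 * MiB, 1 * MiB, 1 * MiB]
    for bucket in fuse_into_buckets(sizes, min_bucket_bytes=10 * MiB):
        print(len(bucket), "tensors,", sum(bucket) // MiB, "MiB")
```

In this toy, seven tensors become three messages of 12, 11, and 2 MiB. Only the final leftover bucket can fall below the threshold.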

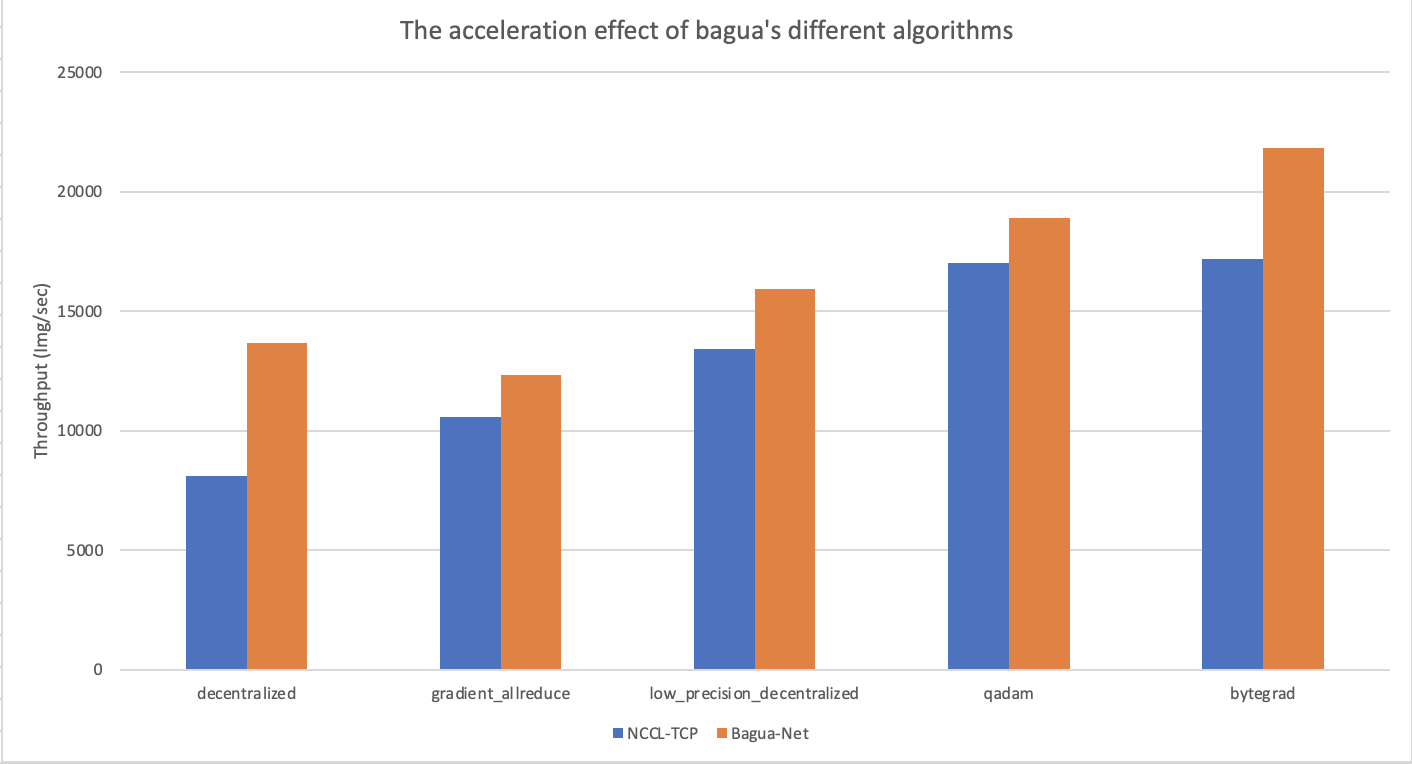

Effect on Bagua algorithms

The data comes from real ImageNet training on 128 V100 GPUs. The throughput increase brought by Bagua-Net ranges from 11% to 68%.